Choose the Right Analyzer for Your Use Case

This guide focuses on practical decision-making for analyzer selection. For technical details about analyzer components and how to add analyzer parameters, refer to Analyzer Overview.

Understand analyzers in 2 minutes

In Milvus, an analyzer processes the text stored in a VARCHAR field to make it searchable for features like full text search (BM25), phrase match, or text match. Think of it as a text processor that transforms your raw content into searchable tokens.

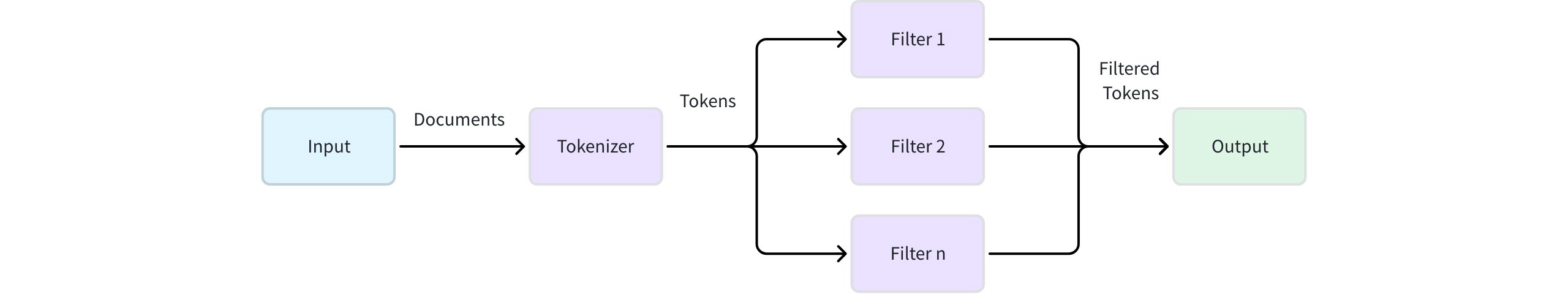

An analyzer works in a simple, two-stage pipeline:

Analyzer Workflow

Tokenization (required): This initial stage applies a tokenizer to break a continuous string of text into discrete, meaningful units called tokens. The tokenization method can vary significantly depending on the language and content type.

Token filtering (optional): After tokenization, filters are applied to modify, remove, or refine the tokens. These operations can include converting all tokens to lowercase, removing common words that carry little meaning (stop words), or reducing words to their root form (stemming).

Example:

Input: "Hello World!"

1. Tokenization → ["Hello", "World", "!"]

2. Lowercase & Punctuation Filtering → ["hello", "world"]
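To make the two stages concrete, here is a toy Python sketch that reproduces the example above. The functions `tokenize`, `lowercase`, `drop_punctuation`, and `analyze` are hypothetical illustrations, not part of Milvus or pymilvus; Milvus runs the real pipeline internally.

```python
import re

# Toy illustration only: NOT Milvus's tokenizer or filter implementation.
def tokenize(text):
    # Stage 1: split into runs of word characters and standalone punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text)

def lowercase(tokens):
    return [t.lower() for t in tokens]

def drop_punctuation(tokens):
    # Keep only tokens that contain at least one word character.
    return [t for t in tokens if re.search(r"\w", t)]

def analyze(text, filters):
    tokens = tokenize(text)
    for f in filters:  # Stage 2: filters run in the order they are listed
        tokens = f(tokens)
    return tokens

raw = tokenize("Hello World!")
result = analyze("Hello World!", [lowercase, drop_punctuation])
print(raw)     # ['Hello', 'World', '!']
print(result)  # ['hello', 'world']
```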

Why the choice of analyzer matters

Choosing the wrong analyzer can make relevant documents unsearchable or return irrelevant results.

The following table summarizes common problems caused by improper analyzer selection and provides actionable solutions for diagnosing search issues.

| Problem | Symptom | Example (Input & Output) | Cause (Bad Analyzer) | Solution (Good Analyzer) |
|---|---|---|---|---|
| Over-tokenization | Text queries for technical terms, identifiers, or URLs fail to find relevant documents. | | | Use a … |
| Under-tokenization | A search for a component of a multi-word phrase fails to return documents containing the full phrase. | | Analyzer with a … | Use a … |
| Language Mismatches | Search results for a specific language are nonsensical or nonexistent. | Chinese text: … | | Use a language-specific analyzer, such as … |
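The three failure modes can be reproduced with two deliberately simple, hypothetical tokenizers written in plain Python. They approximate "split on punctuation" and "split on whitespace" behavior only; Milvus's actual tokenizers follow more detailed rules.

```python
import re

# Hypothetical stand-ins, not Milvus's standard or whitespace tokenizers.
def split_on_punct(text):
    # Runs of letters/digits (any script), excluding underscores.
    return [t.lower() for t in re.findall(r"[^\W_]+", text)]

def split_on_space(text):
    return text.lower().split()

over = split_on_punct("C++ user_id")              # ['c', 'user', 'id']
under = split_on_space("state-of-the-art models")  # ['state-of-the-art', 'models']
cjk = split_on_punct("机器学习很有趣")             # ['机器学习很有趣']

print("c++" in over)   # False: the identifier was broken apart
print("art" in under)  # False: the component is hidden inside one token
print("学习" in cjk)    # False: the whole sentence is a single token
```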

First question: Do you need to choose an analyzer?

For many use cases, you don’t need to do anything special. Let’s determine if you’re one of them.

Default behavior: standard analyzer

If you don’t specify an analyzer when using text retrieval features like full text search, Milvus automatically uses the standard analyzer.

The standard analyzer:

Splits text on spaces and punctuation

Converts all tokens to lowercase

Removes most punctuation (stop words such as "the" and "is" are kept by default)

Example transformation:

Input: "The Milvus vector database is built for scale!"

Output: ['the', 'milvus', 'vector', 'database', 'is', 'built', 'for', 'scale']

Decision criteria: A quick check

Use this table to quickly determine if the default standard analyzer meets your needs. If it doesn’t, you’ll need to choose a different path.

| Your Content | Standard Analyzer OK? | Why | What You Need |
|---|---|---|---|
| English blog posts | ✅ Yes | Default behavior is sufficient. | Use the default (no configuration needed). |
| Chinese documents | ❌ No | Chinese text has no spaces between words, so an entire run of characters is treated as a single token. | Use a built-in … |
| Technical documentation | ❌ No | Punctuation is stripped from terms like … | Create a custom analyzer with a … |
| Space-separated languages such as French/Spanish text | ⚠️ Maybe | Accented characters (… | A custom analyzer with the … |
| Multilingual or unknown languages | ❌ No | The … | Use a custom analyzer with the … Alternatively, consider configuring multi-language analyzers or a language identifier for more precise handling of multilingual content. |

If the default standard analyzer does not meet your requirements, you need a different analyzer. You have two paths:

Path A: Use built-in analyzers

Built-in analyzers are pre-configured solutions for common languages. They are the easiest way to get started when the default standard analyzer isn’t a perfect fit.

Available built-in analyzers

| Analyzer | Language Support | Components | Notes |
|---|---|---|---|
| | Most space-separated languages (English, French, German, Spanish, etc.) | | General-purpose analyzer for initial text processing. For monolingual scenarios, language-specific analyzers (like … |
| | Dedicated to English, which applies stemming and stop word removal for better English semantic matching | | Recommended for English-only content over … |
| | Chinese | | Currently uses a Simplified Chinese dictionary by default. |

Implementation example

To use a built-in analyzer, simply specify its type in the analyzer_params when defining your field schema.

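```python
from pymilvus import MilvusClient, DataType

# Create a collection schema (add your primary key and other fields as needed)
schema = MilvusClient.create_schema()

# Using built-in English analyzer
analyzer_params = {
    "type": "english"
}

# Applying analyzer config to target VARCHAR field in your collection schema
schema.add_field(
    field_name='text',
    datatype=DataType.VARCHAR,
    max_length=200,
    enable_analyzer=True,
    analyzer_params=analyzer_params,
)
```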

For detailed usage, refer to Full Text Search, Text Match, or Phrase Match.

Path B: Create a custom analyzer

When built-in options don’t meet your needs, you can create a custom analyzer by combining a tokenizer with a set of filters. This gives you full control over the text processing pipeline.

Step 1: Select the tokenizer based on language

Choose your tokenizer based on your content’s primary language:

Western languages

For space-separated languages, you have these options:

| Tokenizer | How It Works | Best For | Examples |
|---|---|---|---|
| | Splits text based on spaces and punctuation marks | General text, mixed punctuation | |
| | Splits only on whitespace characters | Pre-processed content, user-formatted text | |

East Asian languages

Dictionary-based languages require specialized tokenizers for proper word segmentation:

Chinese

| Tokenizer | How It Works | Best For | Examples |
|---|---|---|---|
| | Chinese dictionary-based segmentation with intelligent algorithm | Recommended for Chinese content - combines dictionary with intelligent algorithms, specifically designed for Chinese | |
| | Pure dictionary-based morphological analysis with Chinese dictionary (cc-cedict) | Compared to … | |
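Dictionary-based segmentation can be illustrated with forward maximum matching, a classic textbook technique: at each position, take the longest dictionary word that fits. This sketch is not how jieba or lindera work internally (both use richer dictionaries and scoring); the tiny dictionary is invented for the example.

```python
def forward_max_match(text, dictionary, max_len=4):
    """Toy forward maximum matching segmenter (hypothetical helper)."""
    tokens, i = [], 0
    while i < len(text):
        # Try the longest candidate starting at position i first.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            # Fall back to a single character when no dictionary word matches.
            if size == 1 or piece in dictionary:
                tokens.append(piece)
                i += size
                break
    return tokens

toy_dict = {"机器", "学习", "机器学习", "很", "有趣"}
print(forward_max_match("机器学习很有趣", toy_dict))  # ['机器学习', '很', '有趣']
```

Even this simple approach splits the sentence into searchable words, whereas splitting on spaces and punctuation would leave it as one token.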

Japanese and Korean

| Language | Tokenizer | Dictionary Options | Best For | Examples |
|---|---|---|---|---|
| Japanese | | ipadic (general-purpose), ipadic-neologd (modern terms), unidic (academic) | Morphological analysis with proper noun handling | |
| Korean | | | Korean morphological analysis | |

Multilingual or unknown languages

For content where languages are unpredictable or mixed within documents:

| Tokenizer | How It Works | Best For | Examples |
|---|---|---|---|
| | Unicode-aware tokenization (International Components for Unicode) | Mixed scripts, unknown languages, or when simple tokenization is sufficient | |

When to use icu:

Mixed languages where language identification is impractical.

You don’t want the overhead of multi-language analyzers or the language identifier.

Content has a primary language with occasional foreign words that contribute little to the overall meaning (e.g., English text with sporadic brand names or technical terms in Japanese or French).

Alternative approaches: For more precise handling of multilingual content, consider using multi-language analyzers or the language identifier. For details, refer to Multi-language Analyzers or Language Identifier.

Step 2: Add filters for precision

After selecting your tokenizer, apply filters based on your specific search requirements and content characteristics.

Commonly used filters

These filters are essential for most space-separated language configurations (English, French, German, Spanish, etc.) and significantly improve search quality:

| Filter | How It Works | When to Use | Examples |
|---|---|---|---|
| | Convert all tokens to lowercase | Universal - applies to all languages with case distinctions | |
| | Reduce words to their root form | Languages with word inflections (English, French, German, etc.) | For English: … |
| | Remove common meaningless words | Most languages - particularly effective for space-separated languages | |

For East Asian languages (Chinese, Japanese, Korean, etc.), focus on language-specific filters instead. These languages typically use different approaches for text processing and may not benefit significantly from stemming.
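The sketch below chains the three common filters on a token list. The stop word list and the suffix-stripping "stemmer" are deliberately crude, hypothetical stand-ins; real stemmers such as Snowball handle far more cases.

```python
STOP_WORDS = {"the", "a", "an", "is", "are", "of"}  # tiny illustrative list
SUFFIXES = ("ing", "ed", "s")  # crude suffix stripping, not a real stemmer

def crude_stem(token):
    for suffix in SUFFIXES:
        # Only strip when at least three characters remain.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def english_like_filters(tokens):
    tokens = [t.lower() for t in tokens]                 # lowercase
    tokens = [t for t in tokens if t not in STOP_WORDS]  # stop word removal
    return [crude_stem(t) for t in tokens]               # stemming (crude)

print(english_like_filters(["Searching", "the", "indexed", "documents"]))
# ['search', 'index', 'document']
```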

Text normalization filters

These filters standardize text variations to improve matching consistency:

| Filter | How It Works | When to Use | Examples |
|---|---|---|---|
| | Convert accented characters to ASCII equivalents | International content, user-generated content | |
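ASCII folding can be approximated in Python with Unicode NFKD decomposition followed by dropping combining marks. This is an approximation under stated assumptions: dedicated ASCII-folding filters also map characters that do not decompose, such as "ß" or "æ", which this sketch leaves unchanged.

```python
import unicodedata

def ascii_fold(token):
    # Decompose accented letters (e.g. "é" -> "e" + combining accent) ...
    decomposed = unicodedata.normalize("NFKD", token)
    # ... then drop the combining marks. Non-decomposable letters pass through unchanged.
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

print([ascii_fold(t) for t in ["café", "naïve", "Ñandú"]])  # ['cafe', 'naive', 'Nandu']
```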

Token filtering

Control which tokens are preserved based on character content or length:

| Filter | How It Works | When to Use | Examples |
|---|---|---|---|
| | Remove standalone punctuation tokens | Clean output from … | |
| | Keep only letters and numbers | Technical content, clean text processing | |
| | Remove tokens outside specified length range | Filter noise (excessively long tokens) | |
| | Custom pattern-based filtering | Domain-specific token requirements | |
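The following plain-Python functions are rough, hypothetical stand-ins for the `removepunct`, `alphanumonly`, and `length` filters used in this guide's examples. The exact character classes Milvus uses may differ; here "alphanumeric" is assumed to mean ASCII letters and digits.

```python
import unicodedata

def is_punct(ch):
    # Unicode punctuation categories all start with "P" (Pc, Pd, Ps, Pe, Pi, Pf, Po).
    return unicodedata.category(ch).startswith("P")

def remove_punct(tokens):
    # Drop tokens made up entirely of punctuation characters.
    return [t for t in tokens if not all(is_punct(c) for c in t)]

def alphanum_only(tokens):
    # Keep tokens whose characters are all ASCII letters or digits.
    return [t for t in tokens if t.isascii() and t.isalnum()]

def length_filter(tokens, min_len=1, max_len=20):
    return [t for t in tokens if min_len <= len(t) <= max_len]

tokens = ["error", "!", "c++", "v2", "x" * 25]
print(remove_punct(tokens))   # "!" is gone; "c++" stays because "+" is a math symbol
print(alphanum_only(tokens))  # "!" and "c++" are gone
print(length_filter(tokens))  # the 25-character token is gone
```

Note that the 25-character run of `x` passes `alphanum_only` and is only caught by `length_filter`, which is one reason these filters are often combined.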

Language-specific filters

These filters handle specific language characteristics:

| Filter | Language | How It Works | Examples |
|---|---|---|---|
| | German | Splits compound words into searchable components | |
| | Chinese | Keeps Chinese characters + alphanumeric | |
| | Chinese | Keeps only Chinese characters | |

Step 3: Combine and implement

To create your custom analyzer, you define the tokenizer and a list of filters in the analyzer_params dictionary. The filters are applied in the order they are listed.

```python
from pymilvus import MilvusClient, DataType

# Create a collection schema (add your primary key and other fields as needed)
schema = MilvusClient.create_schema()

# Example: A custom analyzer for technical content
analyzer_params = {
    "tokenizer": "whitespace",
    "filter": ["lowercase", "alphanumonly"]
}

# Applying analyzer config to target VARCHAR field in your collection schema
schema.add_field(
    field_name='text',
    datatype=DataType.VARCHAR,
    max_length=200,
    enable_analyzer=True,
    analyzer_params=analyzer_params,
)
```
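Because filters run in list order, reordering them can change the output. This toy sketch (hypothetical functions, not Milvus internals) uses a case-sensitive stop word list that only catches "The" when `lowercase` runs first.

```python
STOP_WORDS = {"the"}  # case-sensitive toy stop word list

def lowercase(tokens):
    return [t.lower() for t in tokens]

def stop(tokens):
    return [t for t in tokens if t not in STOP_WORDS]

def run_filters(tokens, filters):
    for f in filters:  # applied left to right, like the "filter" list
        tokens = f(tokens)
    return tokens

tokens = ["The", "Vector", "the", "Index"]
print(run_filters(tokens, [lowercase, stop]))  # ['vector', 'index']
print(run_filters(tokens, [stop, lowercase]))  # ['the', 'vector', 'index']
```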

Final: Test with run_analyzer

Always validate your configuration before applying it to a collection:

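```python
from pymilvus import MilvusClient

# Connect to Milvus (adjust the URI for your deployment)
client = MilvusClient(uri="http://localhost:19530")
analyzer_params = {"tokenizer": "whitespace", "filter": ["lowercase", "alphanumonly"]}

# Sample text to analyze
sample_text = "The Milvus vector database is built for scale!"

# Run analyzer with the defined configuration
result = client.run_analyzer(sample_text, analyzer_params)

print("Analyzer output:", result)
```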

Common issues to check:

Over-tokenization: Technical terms being split incorrectly

Under-tokenization: Phrases not being separated properly

Missing tokens: Important terms being filtered out

For detailed usage, refer to run_analyzer.
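When you inspect the output, a small helper can flag the three issues listed above. This sketch assumes you have already extracted the token strings from the `run_analyzer` result into a plain list (the exact result structure depends on your pymilvus version); `diagnose` is a hypothetical helper and its heuristics are intentionally simple.

```python
def diagnose(tokens, expected_terms):
    """Hypothetical helper: explain why expected terms are not whole tokens."""
    report = {}
    for term in expected_terms:
        if term in tokens:
            continue  # the term survived analysis intact
        if any(term in t for t in tokens):
            report[term] = "under-tokenized: hidden inside a larger token"
        elif any(t and t in term for t in tokens):
            report[term] = "over-tokenized: split into fragments"
        else:
            report[term] = "missing: possibly removed by a filter"
    return report

tokens = ["c", "state-of-the-art", "database"]
print(diagnose(tokens, ["c++", "art", "milvus", "database"]))
```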

Recommended configurations by use case

This section provides recommended tokenizer and filter configurations for common use cases when working with analyzers in Milvus. Choose the combination that best matches your content type and search requirements.

Before applying an analyzer to your collection, we recommend using run_analyzer to test and validate its output.

Languages with accent marks (French, Spanish, German, etc.)

Use a standard tokenizer with lowercase conversion, ASCII folding of accent marks, language-specific stemming, and stop word removal. This configuration also works for other European languages if you change the language and stop_words parameters.

```python
# French example
analyzer_params = {
    "tokenizer": "standard",
    "filter": [
        "lowercase",
        "asciifolding",  # Handle accent marks
        {
            "type": "stemmer",
            "language": "french"
        },
        {
            "type": "stop",
            "stop_words": ["_french_"]
        }
    ]
}

# For other languages, modify the language parameter:
# "language": "spanish" for Spanish
# "language": "german" for German
# "stop_words": ["_spanish_"] or ["_german_"] accordingly
```
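If you configure several European languages, a small helper can build this dictionary for each one. `european_analyzer` is a hypothetical convenience function; it assumes the stemmer accepts the lowercase language name and that a matching `_<language>_` stop word list exists in your Milvus version, so verify both with `run_analyzer`.

```python
def european_analyzer(language):
    """Hypothetical helper: the French-style pipeline above for another language."""
    language = language.lower()
    return {
        "tokenizer": "standard",
        "filter": [
            "lowercase",
            "asciifolding",  # Handle accent marks
            {"type": "stemmer", "language": language},
            {"type": "stop", "stop_words": [f"_{language}_"]},
        ],
    }

spanish_params = european_analyzer("Spanish")
print(spanish_params["filter"][3])  # {'type': 'stop', 'stop_words': ['_spanish_']}
```

You could then pass `spanish_params` as `analyzer_params` to `schema.add_field`, as in the earlier examples.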

English content

For English text, combine the standard tokenizer with lowercasing, English stemming, and English stop word removal. The built-in english analyzer is a shortcut for the same pipeline:

```python
analyzer_params = {
    "tokenizer": "standard",
    "filter": [
        "lowercase",
        {
            "type": "stemmer",
            "language": "english"
        },
        {
            "type": "stop",
            "stop_words": ["_english_"]
        }
    ]
}

# Equivalent built-in shortcut:
analyzer_params = {
    "type": "english"
}
```

Chinese content

Use the jieba tokenizer and apply a character filter to retain only Chinese characters, Latin letters, and digits.

```python
analyzer_params = {
    "tokenizer": "jieba",
    "filter": ["cnalphanumonly"]
}

# Equivalent built-in shortcut:
analyzer_params = {
    "type": "chinese"
}
```

For Simplified Chinese, cnalphanumonly keeps only tokens made up of Chinese characters, Latin letters, and digits and discards the rest. This prevents punctuation from affecting search quality.

Japanese content

Use the lindera tokenizer with a Japanese dictionary, plus filters that remove punctuation and control token length:

```python
analyzer_params = {
    "tokenizer": {
        "type": "lindera",
        "dict_kind": "ipadic"  # Options: ipadic, ipadic-neologd, unidic
    },
    "filter": [
        "removepunct",  # Remove standalone punctuation
        {
            "type": "length",
            "min": 1,
            "max": 20
        }
    ]
}
```

Korean content

As with Japanese, use the lindera tokenizer, this time with the Korean dictionary:

```python
analyzer_params = {
    "tokenizer": {
        "type": "lindera",
        "dict_kind": "ko-dic"
    },
    "filter": [
        "removepunct",
        {
            "type": "length",
            "min": 1,
            "max": 20
        }
    ]
}
```

Mixed or multilingual content

When working with content that spans multiple languages or uses scripts unpredictably, start with the icu tokenizer. This Unicode-aware tokenizer handles mixed scripts and symbols effectively.

Basic multilingual configuration (no stemming):

```python
analyzer_params = {
    "tokenizer": "icu",
    "filter": ["lowercase", "asciifolding"]
}
```

Advanced multilingual processing:

For better control over token behavior across different languages:

Use a multi-language analyzer configuration. For details, refer to Multi-language Analyzers.

Implement a language identifier on your content. For details, refer to Language Identifier.
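To illustrate the idea behind routing text to different analyzers, here is a naive, hypothetical router that picks one of the configurations from this guide based on character scripts. It is not Milvus's language identifier, which is configured in the analyzer parameters (see the pages linked above); it only shows why per-document routing can beat a single generic analyzer. Treating all-ASCII text as English is a deliberate simplification.

```python
import unicodedata

# Analyzer configs taken from the examples in this guide.
CHINESE = {"tokenizer": "jieba", "filter": ["cnalphanumonly"]}
ENGLISH = {"type": "english"}
FALLBACK = {"tokenizer": "icu", "filter": ["lowercase", "asciifolding"]}

def pick_analyzer(text):
    """Naive, hypothetical router: not a real language identifier."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return FALLBACK
    # Count letters whose Unicode name marks them as CJK ideographs.
    cjk = sum(1 for ch in letters
              if unicodedata.name(ch, "").startswith("CJK UNIFIED IDEOGRAPH"))
    if cjk / len(letters) > 0.5:
        return CHINESE
    if text.isascii():  # simplification: treat plain ASCII text as English
        return ENGLISH
    return FALLBACK

print(pick_analyzer("机器学习很有趣"))  # the jieba config
print(pick_analyzer("vector search"))   # the english config
print(pick_analyzer("Café crème"))      # the icu fallback
```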

Integrate with text retrieval features

After selecting your analyzer, you can integrate it with text retrieval features provided by Milvus.

Full text search

Analyzers directly impact BM25-based full text search through sparse vector generation. Use the same analyzer for both indexing and querying to ensure consistent tokenization. For monolingual content, language-specific analyzers generally produce more relevant BM25 rankings than generic ones. For implementation details, refer to Full Text Search.
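To see why analyzer consistency matters for BM25, the sketch below implements the textbook Okapi BM25 score (with the common defaults k1 = 1.2 and b = 0.75) over pre-tokenized lists. It is an illustration, not Milvus's implementation: if the query is not lowercased like the documents, the term never matches and scores zero.

```python
import math
from collections import Counter

def bm25(query_tokens, doc_tokens, corpus, k1=1.2, b=0.75):
    """Textbook Okapi BM25 score of one document against a query.
    All arguments are token lists that should come from the same analyzer."""
    n = len(corpus)
    avgdl = sum(len(d) for d in corpus) / n
    tf = Counter(doc_tokens)
    score = 0.0
    for term in query_tokens:
        df = sum(1 for d in corpus if term in d)  # documents containing the term
        if df == 0 or tf[term] == 0:
            continue  # an unmatched token contributes nothing
        idf = math.log(1 + (n - df + 0.5) / (df + 0.5))
        length_norm = k1 * (1 - b + b * len(doc_tokens) / avgdl)
        score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
    return score

corpus = [["vector", "database"], ["relational", "database"]]  # lowercased at index time
print(bm25(["vector"], corpus[0], corpus))  # positive score
print(bm25(["Vector"], corpus[0], corpus))  # 0.0: query skipped lowercasing
```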

Text match

Text match operations perform exact token matching between queries and indexed content based on your analyzer output. For implementation details, refer to Text Match.
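Token-level matching can be sketched as a set intersection. In this hypothetical example, the token lists are assumed to come from an analyzer with stemming, so "indexing" and "indexed" both reduce to "index"; if the query side is not stemmed, the match fails.

```python
def text_match(query_tokens, doc_tokens):
    """Toy token-level match: True if any query token appears among the document tokens."""
    return bool(set(query_tokens) & set(doc_tokens))

doc_tokens = ["index", "document"]  # "indexed documents" after lowercase + stemming
print(text_match(["index"], doc_tokens))     # True: query "indexing" stemmed the same way
print(text_match(["indexing"], doc_tokens))  # False: query left unstemmed
```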

Phrase match

Phrase match requires consistent tokenization across multi-word expressions to maintain phrase boundaries and meaning. For implementation details, refer to Phrase Match.
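A phrase match can be sketched as looking for the phrase tokens as one contiguous run in the document tokens. This hypothetical helper ignores slop and the token positions a real index stores; it only shows that the phrase and the document must be tokenized the same way.

```python
def phrase_match(phrase_tokens, doc_tokens):
    """Toy check: do the phrase tokens appear as one contiguous run in the document?"""
    n = len(phrase_tokens)
    return any(doc_tokens[i:i + n] == phrase_tokens
               for i in range(len(doc_tokens) - n + 1))

phrase = ["state", "of", "the", "art"]               # query split on punctuation
doc_split = ["state", "of", "the", "art", "search"]  # document split the same way
doc_whole = ["state-of-the-art", "search"]           # document split on whitespace only
print(phrase_match(phrase, doc_split))  # True
print(phrase_match(phrase, doc_whole))  # False: inconsistent tokenization
```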