GPU Index Overview

Building an index with GPU support in Milvus can significantly improve search performance in high-throughput and high-recall scenarios.

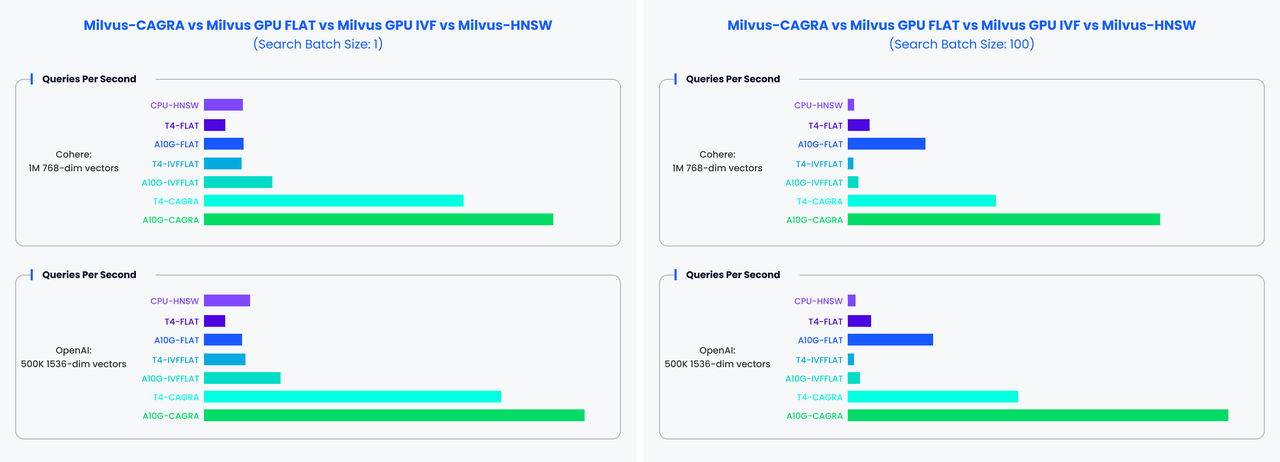

The following figure compares the query throughput (queries per second) of various index configurations across different hardware setups, vector datasets (Cohere and OpenAI), and search batch sizes, showing that GPU_CAGRA consistently outperforms other methods.

GPU Index Performance


Limits

For GPU_IVF_FLAT, the maximum value for limit is 1,024.

For GPU_IVF_PQ and GPU_CAGRA, the maximum value for limit is 1,024.

While there is no set limit for GPU_BRUTE_FORCE, it is recommended not to exceed 4,096 to avoid potential performance issues.

Currently, GPU indexes do not support COSINE distance. If COSINE distance is required, data should be normalized first, and then inner product (IP) distance can be used as a substitute.

Out-of-memory (OOM) protection during loading is not fully supported for GPU indexes; loading too much data might crash the QueryNode.

GPU indexes do not support search functions like range search and grouping search.
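The limit caps and the COSINE workaround described above can be sketched in plain Python. This is a minimal illustration, not Milvus code: the helper names check_limit, normalize, inner_product, and cosine are hypothetical, and the caps are copied from the limits listed above.

```python
import math

# Hard caps on limit, taken from the limits listed above.
MAX_LIMIT = {"GPU_IVF_FLAT": 1024, "GPU_IVF_PQ": 1024, "GPU_CAGRA": 1024}
# GPU_BRUTE_FORCE has no hard cap; 4,096 is only the recommended ceiling.
RECOMMENDED_LIMIT = {"GPU_BRUTE_FORCE": 4096}


def check_limit(index_type, limit):
    """Return "ok", "discouraged", or "too_large" for a requested top-k."""
    if index_type in MAX_LIMIT:
        return "ok" if limit <= MAX_LIMIT[index_type] else "too_large"
    if index_type in RECOMMENDED_LIMIT:
        return "ok" if limit <= RECOMMENDED_LIMIT[index_type] else "discouraged"
    raise ValueError(f"unknown GPU index type: {index_type}")


def inner_product(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def normalize(vector):
    """Scale a vector to unit L2 norm so that IP equals cosine similarity."""
    norm = math.sqrt(inner_product(vector, vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]


def cosine(a, b):
    """Reference cosine similarity, used only to check the substitution."""
    return inner_product(a, b) / (
        math.sqrt(inner_product(a, a)) * math.sqrt(inner_product(b, b))
    )
```

In this sketch, vectors would be normalized once before insertion and each query vector would be normalized before searching, so an index built with the IP metric ranks results the same way COSINE would.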

Supported GPU index types

The following table lists the GPU index types supported by Milvus.

| Index Type | Description | Memory Usage |
|---|---|---|
| GPU_CAGRA | GPU_CAGRA is a graph-based index optimized for GPUs. Using inference-grade GPUs to run the Milvus GPU version can be more cost-effective compared to using expensive training-grade GPUs. | Memory usage is approximately 1.8 times that of the original vector data. |
| GPU_IVF_FLAT | GPU_IVF_FLAT is the most basic IVF index, and the encoded data stored in each unit is consistent with the original data. When conducting searches, note that you can set the top-k ( | Requires memory equal to the size of the original data. |
| GPU_IVF_PQ | GPU_IVF_PQ performs IVF index clustering before quantizing the product of vectors. When conducting searches, note that you can set the top-k ( | Utilizes a smaller memory footprint, which depends on the compression parameter settings. |
| GPU_BRUTE_FORCE | GPU_BRUTE_FORCE is tailored for cases where extremely high recall is crucial, guaranteeing a recall of 1 by comparing each query with all vectors in the dataset. It only requires the metric type ( | Requires memory equal to the size of the original data. |
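The memory figures in the table can be turned into a rough sizing estimate. The sketch below is an illustration under stated assumptions, not a Milvus API: it assumes float32 vectors (4 bytes per dimension), ignores any index overhead beyond the factors in the table, and for GPU_IVF_PQ uses the generic product-quantization code size (pq_m sub-vectors at pq_nbits bits each), which is an assumption that does not come from this page. All function and parameter names are hypothetical.

```python
BYTES_PER_FLOAT32 = 4  # assumption: vectors are stored as float32

# Multipliers relative to the raw vector data, taken from the table above.
MEMORY_FACTOR = {
    "GPU_CAGRA": 1.8,
    "GPU_IVF_FLAT": 1.0,
    "GPU_BRUTE_FORCE": 1.0,
}


def raw_vector_bytes(num_vectors, dim):
    """Size of the original float32 vector data in bytes."""
    return num_vectors * dim * BYTES_PER_FLOAT32


def estimate_index_bytes(index_type, num_vectors, dim, pq_m=None, pq_nbits=8):
    """Rough index memory estimate in bytes for a GPU index type."""
    if index_type == "GPU_IVF_PQ":
        # Assumption: each vector compresses to pq_m codes of pq_nbits bits;
        # codebooks and cluster centroids are ignored.
        if pq_m is None:
            raise ValueError("GPU_IVF_PQ needs pq_m to estimate memory")
        return num_vectors * pq_m * pq_nbits / 8
    if index_type not in MEMORY_FACTOR:
        raise ValueError(f"unknown GPU index type: {index_type}")
    return raw_vector_bytes(num_vectors, dim) * MEMORY_FACTOR[index_type]
```

For example, one million 768-dimensional float32 vectors occupy about 3.07 GB raw, so this estimate puts a GPU_CAGRA index at roughly 5.5 GB.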

Configure Milvus settings for GPU memory control

Milvus uses a global graphics memory pool to allocate GPU memory. The pool is controlled by two parameters in the Milvus configuration file, initMemSize and maxMemSize. The pool size is initially set to initMemSize and is automatically expanded, up to maxMemSize, once usage exceeds initMemSize.

The default initMemSize is 1/2 of the available GPU memory when Milvus starts, and the default maxMemSize is equal to all available GPU memory.
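The defaults and the expansion rule can be modeled with a few lines of Python. This is a toy model for intuition only: the function names are hypothetical, MB is assumed as the unit purely for illustration, and the real pool's growth policy may differ from the simple clamp shown here.

```python
def default_pool_sizes(available_mb):
    """Defaults described above: init is half of the available GPU memory,
    max is all of it."""
    return available_mb // 2, available_mb


def pool_size_for(demand_mb, init_mb, max_mb):
    """Toy model: the pool starts at init_mb, grows to meet demand,
    and never grows past max_mb."""
    return min(max(init_mb, demand_mb), max_mb)
```

With 16,384 MB available, the defaults in this model are 8,192 and 16,384, and the pool stays at 8,192 until demand exceeds it.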

Up until Milvus 2.4.1, Milvus used a unified GPU memory pool. For versions prior to 2.4.1, it was recommended to set both values to 0.

gpu:
  initMemSize: 0 # Initial size of the memory pool.
  maxMemSize: 0 # Maximum memory usage limit. When memory usage exceeds initMemSize, Milvus attempts to expand the memory pool.

From Milvus 2.4.1 onwards, the GPU memory pool is only used for temporary GPU data during searches. Therefore, it is recommended to set initMemSize to 2048 and maxMemSize to 4096.

gpu:
  initMemSize: 2048 # Initial size of the memory pool.
  maxMemSize: 4096 # Maximum memory usage limit. When memory usage exceeds initMemSize, Milvus attempts to expand the memory pool.
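The version-dependent recommendation can be captured in a small helper that emits the matching gpu section. This is a sketch with hypothetical function names, not part of Milvus. It assumes plain numeric version strings such as "2.4.1" (no "v" prefix or pre-release suffix), and it treats 2.4.1 itself as following the newer recommendation, which is one reading of the text above.

```python
def parse_version(version):
    """Turn a string like "2.4.1" into a comparable tuple (2, 4, 1)."""
    return tuple(int(part) for part in version.split("."))


def recommended_gpu_pool(version):
    """Recommended initMemSize/maxMemSize for a given Milvus version."""
    if parse_version(version) < (2, 4, 1):
        # Unified GPU memory pool: both values were recommended to be 0.
        return {"initMemSize": 0, "maxMemSize": 0}
    # Pool only holds temporary GPU data during searches.
    return {"initMemSize": 2048, "maxMemSize": 4096}


def render_gpu_section(settings):
    """Render the settings as the gpu section of the config file."""
    lines = ["gpu:"] + [f"  {key}: {value}" for key, value in settings.items()]
    return "\n".join(lines)
```

Calling render_gpu_section(recommended_gpu_pool("2.4.5")) yields the second configuration shown above, without the comments.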

To learn how to build a GPU index, refer to the specific guide for each index type.

FAQ

When is it appropriate to utilize a GPU index?

A GPU index is particularly beneficial in situations that demand high throughput or high recall. For instance, when dealing with large batches, the throughput of GPU indexing can surpass that of CPU indexing by as much as 100 times. In scenarios with smaller batches, GPU indexes still significantly outshine CPU indexes in terms of performance. Furthermore, if there’s a requirement for rapid data insertion, incorporating a GPU can substantially speed up the process of building indexes.

In which scenarios are GPU indexes like GPU_CAGRA, GPU_IVF_PQ, GPU_IVF_FLAT, and GPU_BRUTE_FORCE most suitable?

GPU_CAGRA indexes are ideal for scenarios that demand enhanced performance, albeit at the cost of consuming more memory. For environments where memory conservation is a priority, the GPU_IVF_PQ index can help minimize storage requirements, though this comes with a higher loss in precision. The GPU_IVF_FLAT index serves as a balanced option, offering a compromise between performance and memory usage. Lastly, the GPU_BRUTE_FORCE index is designed for exhaustive search operations, guaranteeing a recall rate of 1 by performing traversal searches.
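The guidance above can be read as a simple decision rule. The helper below is a hypothetical sketch, not a Milvus feature: the flag names are invented for illustration, and the order in which the flags are checked (exact recall first, then memory, then speed) is an assumption about priorities that a real deployment would need to decide for itself.

```python
def choose_gpu_index(need_exact_recall=False, memory_constrained=False,
                     prioritize_speed=False):
    """Pick a GPU index type from coarse requirements."""
    if need_exact_recall:
        # Exhaustive search guarantees a recall of 1.
        return "GPU_BRUTE_FORCE"
    if memory_constrained:
        # Smallest footprint, at the cost of precision.
        return "GPU_IVF_PQ"
    if prioritize_speed:
        # Best performance, but uses more memory.
        return "GPU_CAGRA"
    # Balanced option between performance and memory usage.
    return "GPU_IVF_FLAT"
```

For instance, choose_gpu_index(memory_constrained=True) returns "GPU_IVF_PQ", while calling it with no flags falls back to the balanced GPU_IVF_FLAT.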